Complex orchestrators and CI/CD pipelines are now essential to software development, but they also mean that a feature travels a long way from commit through testing and delivery before it reaches production.

Developers used to upload new files to a server via FTP, so deployment took a few seconds. Now we have to create a merge request and wait a long time for a feature to reach users.

Building Docker images, which is part of this process, can take tens of minutes. This is hardly acceptable. In this article, we'll dockerize a simple application, then apply several methods for reducing build time and consider their nuances.

Our team has built and now supports several media websites, including those of Russian media outlets such as TASS, The Bell, Novaya Gazeta, and Republic. Recently, while we were deploying the Reminder website to production, adding new features and fixing old bugs, slow deployment became a big problem.

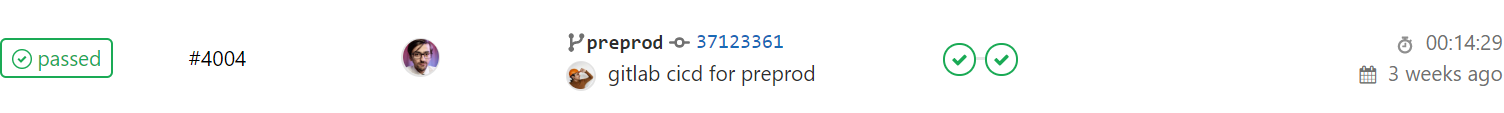

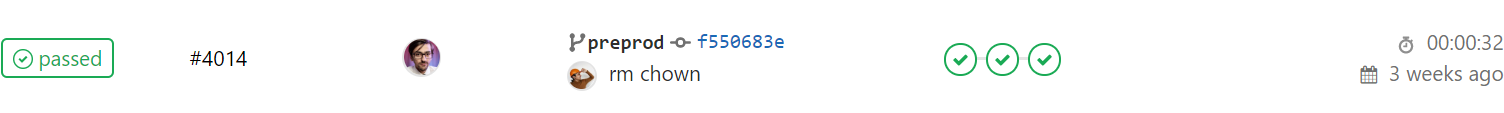

We use GitLab for deployment: we build Docker images, push them to our Container Registry and deploy our container images to production. Building Docker images is the longest process on this list. For example, it took 14 minutes to build each non-optimized backend image.

Something had to be done about that. We decided to figure out why building Docker images took so long and how to fix the situation. As a result, we were able to reduce build time to 30 seconds!

To make our example more or less universal, we will create an empty Angular application:

ng n app

Let’s add PWA support to it (we are progressive, aren't we?):

ng add @angular/pwa --project app

While all those tons of NPM packages are being downloaded, let's talk about what a Docker image is. Docker makes it possible to package applications and run them in an isolated environment called a container. Thanks to this isolation, you can run multiple containers on a single server at the same time. Unlike virtual machines, Docker containers share the host's kernel instead of booting their own operating system, so they are more lightweight. Before running a dockerized application, we build a Docker image into which we package everything the application needs to function. A Docker image is like a snapshot of a file system. For example, let's take this Dockerfile:

FROM node:12.16.2

WORKDIR /app

COPY . .

RUN npm ci

RUN npm run build --prod

A Dockerfile is a set of instructions. Docker executes these instructions step by step and saves the changes to the file system, adding them to the previous ones. Each command creates its own layer. A finished Docker image is all these layers combined together.

It's important to know that Docker caches each layer. If nothing has changed since the previous build, Docker reuses the existing layers instead of executing the commands again. Most of the speed-up comes from using the cache effectively, so when measuring build time we will focus on builds that start with a warm cache. Let's go step by step:

1. First, we need to delete images locally so that previous runs do not affect the test.

docker rmi $(docker images -q)

2. Next, let’s run our build for the first time.

time docker build -t app .

3. Now we change src/index.html, simulating the work of a programmer.

4. Then we run the build for the second time.

time docker build -t app .

If the build environment is set up correctly (see details below), Docker will already have cached layers available when a build starts. Our goal is to learn how to use that cache so that the build runs as quickly as possible. The first build cannot use the cache, so we can ignore how slow it is. What interests us is the second run, when the caches are already warmed up and we are ready to bake the cake, that is, build the image. However, some of the tips will speed up the first build too.
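The measurement procedure can also be scripted. The following is only a minimal sketch: the helper name `elapsed_seconds` is made up for illustration, it reports whole seconds only, and it assumes a `date` that supports `+%s` (GNU, BSD and BusyBox versions do).

```shell
#!/bin/sh
# elapsed_seconds CMD [ARG...]
# Runs the command with its output discarded, prints how many whole seconds
# it took and returns the command's exit status.
# (Hypothetical helper, not part of the original setup.)
elapsed_seconds() {
  start=$(date +%s)
  code=0
  "$@" >/dev/null 2>&1 || code=$?
  end=$(date +%s)
  echo $((end - start))
  return "$code"
}

# Intended use (requires Docker and the Dockerfile from this article):
#   elapsed_seconds docker build -t app .     # first run, cold cache
#   echo '<!-- edit -->' >> src/index.html    # simulate a code change
#   elapsed_seconds docker build -t app .     # second run, warm cache
```

The number printed by the second call is the one to compare between experiments.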

Let’s put the Dockerfile described above in the project folder and run the build process. All listings are shortened for readability.

$ time docker build -t app .

Sending build context to Docker daemon 409MB

Step 1/5 : FROM node:12.16.2

Status: Downloaded newer image for node:12.16.2

Step 2/5 : WORKDIR /app

Step 3/5 : COPY . .

Step 4/5 : RUN npm ci

added 1357 packages in 22.47s

Step 5/5 : RUN npm run build --prod

Date: 2020-04-16T19:20:09.664Z - Hash: fffa0fddaa3425c55dd3 - Time: 37581ms

Successfully built c8c279335f46

Successfully tagged app:latest

real 5m4.541s

user 0m0.000s

sys 0m0.000s

Then we must change the contents of src/index.html and run it for the second time.

$ time docker build -t app .

Sending build context to Docker daemon 409MB

Step 1/5 : FROM node:12.16.2

Step 2/5 : WORKDIR /app

---> Using cache

Step 3/5 : COPY . .

Step 4/5 : RUN npm ci

added 1357 packages in 22.47s

Step 5/5 : RUN npm run build --prod

Date: 2020-04-16T19:26:26.587Z - Hash: fffa0fddaa3425c55dd3 - Time: 37902ms

Successfully built 79f335df92d3

Successfully tagged app:latest

real 3m33.262s

user 0m0.000s

sys 0m0.000s

Now we execute the docker images command to see if our image has been successfully created:

REPOSITORY TAG IMAGE ID CREATED SIZE

app latest 79f335df92d3 About a minute ago 1.74GB

Before the build process starts, Docker takes all the files in the current build context and sends them to the Docker daemon: Sending build context to Docker daemon 409MB. The build context is indicated by the last build command argument — in our case, it is a period (.), which means that Docker will take all files from the current folder. But 409MB is a lot, so let's think about how to fix this situation.

Reducing build context size

There are two ways to reduce context size:

- We can put all the files needed for the build in a separate folder and point Docker to it. It's not always convenient.

- We can exclude files that are not needed for the build by adding a .dockerignore file to the context directory:

.git

/node_modules
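Before rebuilding, the effect of such exclusions can be estimated without Docker. The sketch below archives a directory with `tar` and counts the bytes, which roughly mirrors what is sent to the daemon. The helper name `context_bytes` is hypothetical, and `tar` exclude patterns are not the same as `.dockerignore` syntax, so treat the result as an approximation.

```shell
#!/bin/sh
# context_bytes DIR [PATTERN...]
# Prints the size in bytes of a tar archive of DIR, skipping every path that
# matches one of the given tar exclude patterns.
# (Hypothetical helper; an estimate, not Docker's own context calculation.)
context_bytes() {
  dir=$1
  shift
  # Turn each remaining argument into a --exclude=PATTERN option.
  n=$#
  while [ "$n" -gt 0 ]; do
    set -- "$@" "--exclude=$1"
    shift
    n=$((n - 1))
  done
  tar -cf - -C "$dir" "$@" . | wc -c | tr -d ' '
}

# Intended use, from the project root:
#   context_bytes .                      # everything
#   context_bytes . node_modules .git    # roughly what .dockerignore leaves
```

Comparing the two numbers shows how much of the context consists of files the build never needs.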

Let's build our image again:

$ time docker build -t app .

Sending build context to Docker daemon 607.2kB

Step 1/5 : FROM node:12.16.2

Step 2/5 : WORKDIR /app

---> Using cache

Step 3/5 : COPY . .

Step 4/5 : RUN npm ci

added 1357 packages in 22.47s

Step 5/5 : RUN npm run build --prod

Date: 2020-04-16T19:33:54.338Z - Hash: fffa0fddaa3425c55dd3 - Time: 37313ms

Successfully built 4942f010792a

Successfully tagged app:latest

real 1m47.763s

user 0m0.000s

sys 0m0.000s

Yes, 607.2KB is much better than 409MB. And we also reduced the image size from 1.74GB to 1.38GB:

REPOSITORY TAG IMAGE ID CREATED SIZE

app latest 4942f010792a 3 minutes ago 1.38GB

Now we will try to reduce the image size even more.

Using Alpine Linux

Another way to keep your Docker image small is to use a small parent image. A parent image is the image that our image is based on; it forms the lowest layer and is specified with the FROM instruction in a Dockerfile. In our case, we use a Debian-based image with Node.js already installed. But it's almost 1GB (a monstrous size!).

$ docker images -a | grep node

node 12.16.2 406aa3abbc6c 17 minutes ago 916MB

We can dramatically reduce the image size if we use an image based on Alpine Linux. Alpine Linux is an extremely lightweight Linux distribution. An Alpine image for Node.js is only 88.5MB! So let's replace our big image with the smaller one:

FROM node:12.16.2-alpine3.11

RUN apk --no-cache --update --virtual build-dependencies add python make g++

WORKDIR /app

COPY . .

RUN npm ci

RUN npm run build --prod

We had to install a few things that are also needed to build our application. Yes, Angular won’t build without Python. ¯(°_o)/¯

But it was worth it — we reduced the image size by 619MB:

REPOSITORY TAG IMAGE ID CREATED SIZE

app latest aa031edc315a 22 minutes ago 761MB
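The saving can be recomputed from the SIZE column of the two listings. Here is a small sketch for such sanity checks; the helper names `to_mb` and `saved_mb` are made up, and the conversion assumes decimal units (1GB = 1000MB).

```shell
#!/bin/sh
# to_mb SIZE: convert a size such as "1.38GB", "761MB" or "607.2kB"
# to whole megabytes, assuming decimal units. (Hypothetical helper.)
to_mb() {
  printf '%s\n' "$1" | awk '
    /GB$/ { sub(/GB$/, ""); printf "%.0f\n", $0 * 1000; next }
    /MB$/ { sub(/MB$/, ""); printf "%.0f\n", $0; next }
    /kB$/ { sub(/kB$/, ""); printf "%.0f\n", $0 / 1000; next }
  '
}

# saved_mb BEFORE AFTER: print how many megabytes were saved.
saved_mb() {
  echo $(( $(to_mb "$1") - $(to_mb "$2") ))
}

saved_mb 1.38GB 761MB   # prints 619
```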

Let's go even further.

Using multi-stage builds

We will take from our image only what is actually needed in production. This is what we have now:

$ docker run app ls -lah

total 576K

drwxr-xr-x 1 root root 4.0K Apr 16 19:54 .

drwxr-xr-x 1 root root 4.0K Apr 16 20:00 ..

-rwxr-xr-x 1 root root 19 Apr 17 2020 .dockerignore

-rwxr-xr-x 1 root root 246 Apr 17 2020 .editorconfig

-rwxr-xr-x 1 root root 631 Apr 17 2020 .gitignore

-rwxr-xr-x 1 root root 181 Apr 17 2020 Dockerfile

-rwxr-xr-x 1 root root 1020 Apr 17 2020 README.md

-rwxr-xr-x 1 root root 3.6K Apr 17 2020 angular.json

-rwxr-xr-x 1 root root 429 Apr 17 2020 browserslist

drwxr-xr-x 3 root root 4.0K Apr 16 19:54 dist

drwxr-xr-x 3 root root 4.0K Apr 17 2020 e2e

-rwxr-xr-x 1 root root 1015 Apr 17 2020 karma.conf.js

-rwxr-xr-x 1 root root 620 Apr 17 2020 ngsw-config.json

drwxr-xr-x 1 root root 4.0K Apr 16 19:54 node_modules

-rwxr-xr-x 1 root root 494.9K Apr 17 2020 package-lock.json

-rwxr-xr-x 1 root root 1.3K Apr 17 2020 package.json

drwxr-xr-x 5 root root 4.0K Apr 17 2020 src

-rwxr-xr-x 1 root root 210 Apr 17 2020 tsconfig.app.json

-rwxr-xr-x 1 root root 489 Apr 17 2020 tsconfig.json

-rwxr-xr-x 1 root root 270 Apr 17 2020 tsconfig.spec.json

-rwxr-xr-x 1 root root 1.9K Apr 17 2020 tslint.json

Using docker run app ls -lah, we ran a container based on our app image and executed the ls -lah command, then our container exited.

For production, we need only the dist folder. We also need to serve the files somehow. We could run some kind of Node.js HTTP server, but there is an easier way: we take an Nginx image and put the dist folder and the small config file below in it:

server {

listen 80 default_server;

server_name localhost;

charset utf-8;

root /app/dist;

location / {

try_files $uri $uri/ /index.html;

}

}

We'll do this by using multi-stage builds. Let's change our Dockerfile:

FROM node:12.16.2-alpine3.11 as builder

RUN apk --no-cache --update --virtual build-dependencies add python make g++

WORKDIR /app

COPY . .

RUN npm ci

RUN npm run build --prod

FROM nginx:1.17.10-alpine

RUN rm /etc/nginx/conf.d/default.conf

COPY nginx/static.conf /etc/nginx/conf.d

COPY --from=builder /app/dist/app .

Now we have two FROM commands, and each one begins its own stage of the build process. We named the first stage builder, and the second one produces our final image. In the last step, we copy the artifact from the builder stage into the final Nginx image. The image size has shrunk significantly:

REPOSITORY TAG IMAGE ID CREATED SIZE

app latest 2c6c5da07802 29 minutes ago 36MB

Let's run our image as a container and make sure everything works:

docker run -p8080:80 app

With the -p8080:80 option, we forwarded port 8080 of our host machine to port 80 of the container in which Nginx is running. Now we open http://localhost:8080/ in a browser and see our application. It works!

Reducing the size of the image from 1.74GB to 36MB greatly shortens the delivery time of an application into production. But let's get back to build time.

$ time docker build -t app .

Sending build context to Docker daemon 608.8kB

Step 1/11 : FROM node:12.16.2-alpine3.11 as builder

Step 2/11 : RUN apk --no-cache --update --virtual build-dependencies add python make g++

---> Using cache

Step 3/11 : WORKDIR /app

---> Using cache

Step 4/11 : COPY . .

Step 5/11 : RUN npm ci

added 1357 packages in 47.338s

Step 6/11 : RUN npm run build --prod

Date: 2020-04-16T21:16:03.899Z - Hash: fffa0fddaa3425c55dd3 - Time: 39948ms

---> 27f1479221e4

Step 7/11 : FROM nginx:stable-alpine

Step 8/11 : WORKDIR /app

---> Using cache

Step 9/11 : RUN rm /etc/nginx/conf.d/default.conf

---> Using cache

Step 10/11 : COPY nginx/static.conf /etc/nginx/conf.d

---> Using cache

Step 11/11 : COPY --from=builder /app/dist/app .

Successfully built d201471c91ad

Successfully tagged app:latest

real 2m17.700s

user 0m0.000s

sys 0m0.000s

Changing the order of layers

Docker cached the first three steps (Using cache). In the fourth step, all project files are copied, and in the fifth step, npm ci installs dependencies, which takes a whole 47.338 seconds. Why re-install dependencies each time if they change very rarely? Let's see why they haven't been cached. Docker goes through the layers one by one and checks whether the command and the files associated with it have changed. In the fourth step, we copy all the files of our project, and some of them have changed. This is why Docker cannot reuse the cached version of this layer or of any layer after it. Let's make a few changes to our Dockerfile.

FROM node:12.16.2-alpine3.11 as builder

RUN apk --no-cache --update --virtual build-dependencies add python make g++

WORKDIR /app

COPY package*.json ./

RUN npm ci

COPY . .

RUN npm run build --prod

FROM nginx:1.17.10-alpine

RUN rm /etc/nginx/conf.d/default.conf

COPY nginx/static.conf /etc/nginx/conf.d

COPY --from=builder /app/dist/app .

First, package.json and package-lock.json are copied, then dependencies are installed, and only after that the whole project is copied. As a result:

$ time docker build -t app .

Sending build context to Docker daemon 608.8kB

Step 1/12 : FROM node:12.16.2-alpine3.11 as builder

Step 2/12 : RUN apk --no-cache --update --virtual build-dependencies add python make g++

---> Using cache

Step 3/12 : WORKDIR /app

---> Using cache

Step 4/12 : COPY package*.json ./

---> Using cache

Step 5/12 : RUN npm ci

---> Using cache

Step 6/12 : COPY . .

Step 7/12 : RUN npm run build --prod

Date: 2020-04-16T21:29:44.770Z - Hash: fffa0fddaa3425c55dd3 - Time: 38287ms

---> 1b9448c73558

Step 8/12 : FROM nginx:stable-alpine

Step 9/12 : WORKDIR /app

---> Using cache

Step 10/12 : RUN rm /etc/nginx/conf.d/default.conf

---> Using cache

Step 11/12 : COPY nginx/static.conf /etc/nginx/conf.d

---> Using cache

Step 12/12 : COPY --from=builder /app/dist/app .

Successfully built a44dd7c217c3

Successfully tagged app:latest

real 0m46.497s

user 0m0.000s

sys 0m0.000s

The process took 46 seconds instead of more than 2 minutes, which is much better! It is important to arrange layers in the correct order: first the layers that never change, then those that change rarely and, at the end, those that change often.
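Why the order matters can be illustrated with a toy model. This is not Docker's actual cache algorithm, only a sketch of the idea that a layer's cache key depends on everything before it. Each file stands for one COPY layer, and the function name `layer_keys` and the demo files are hypothetical.

```shell
#!/bin/sh
# layer_keys FILE...
# Toy model: every FILE stands for one "COPY FILE" layer. A layer's key is a
# checksum of the previous layer's key plus the file's content, so a changed
# file alters its own key and the keys of all layers after it.
layer_keys() {
  key=0
  for f in "$@"; do
    key=$( { printf '%s\n' "$key"; cat "$f"; } | cksum | cut -d' ' -f1 )
    printf '%s %s\n' "$key" "$f"
  done
}

# Demo: dependencies first, frequently edited sources last.
demo_dir=$(mktemp -d)
echo '{"name":"app"}' > "$demo_dir/package.json"
echo '<h1>v1</h1>' > "$demo_dir/index.html"

layer_keys "$demo_dir/package.json" "$demo_dir/index.html" > "$demo_dir/before"
echo '<h1>v2</h1>' > "$demo_dir/index.html"   # simulate a code change
layer_keys "$demo_dir/package.json" "$demo_dir/index.html" > "$demo_dir/after"

# Only the index.html layer differs; the package.json layer keeps its key.
diff "$demo_dir/before" "$demo_dir/after" || true
rm -rf "$demo_dir"
```

Passing the files in the opposite order changes both keys after the same edit, which corresponds to the Dockerfile that copied the whole project before running npm ci.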

Next, let’s talk a little about building Docker images in CI/CD systems.

Using previous images as a cache source

If we use some kind of SaaS solution for building our Docker images, the local Docker cache may be completely empty. We need to give Docker an image from the previous build so that it can reuse its layers.

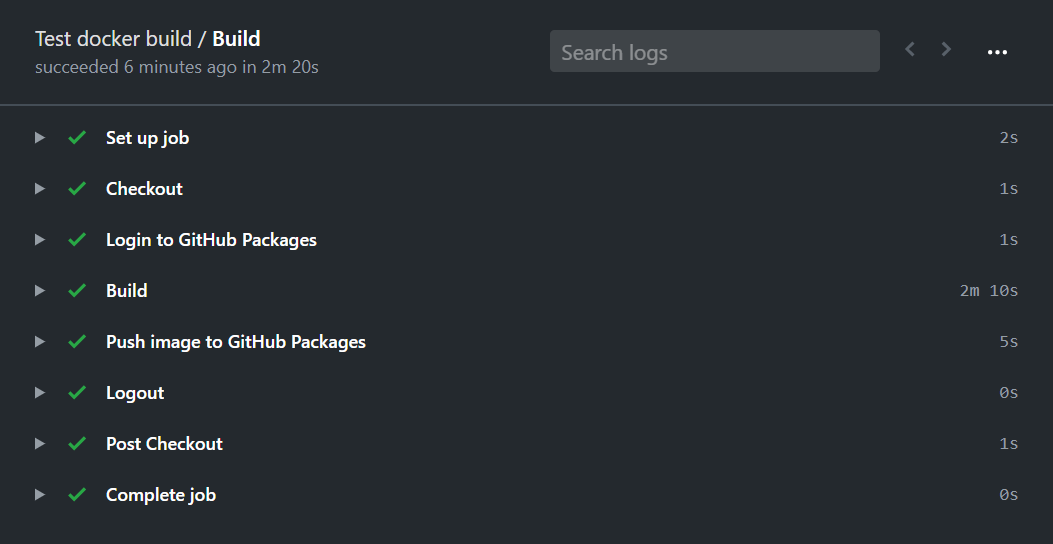

For example, let’s consider building our applications in GitHub Actions. We will use the following config file:

on:
  push:
    branches:
      - master
name: Test docker build
jobs:
  deploy:
    name: Build
    runs-on: ubuntu-latest
    env:
      IMAGE_NAME: docker.pkg.github.com/${{ github.repository }}/app
      IMAGE_TAG: ${{ github.sha }}
    steps:
      - name: Checkout
        uses: actions/checkout@v2
      - name: Login to GitHub Packages
        env:
          TOKEN: ${{ secrets.GITHUB_TOKEN }}
        run: |
          docker login docker.pkg.github.com -u $GITHUB_ACTOR -p $TOKEN
      - name: Build
        run: |
          docker build \
            -t $IMAGE_NAME:$IMAGE_TAG \
            -t $IMAGE_NAME:latest \
            .
      - name: Push image to GitHub Packages
        run: |
          docker push $IMAGE_NAME:latest
          docker push $IMAGE_NAME:$IMAGE_TAG
      - name: Logout
        run: |
          docker logout docker.pkg.github.com

Building and pushing our image to GitHub Packages took 2 minutes and 20 seconds:

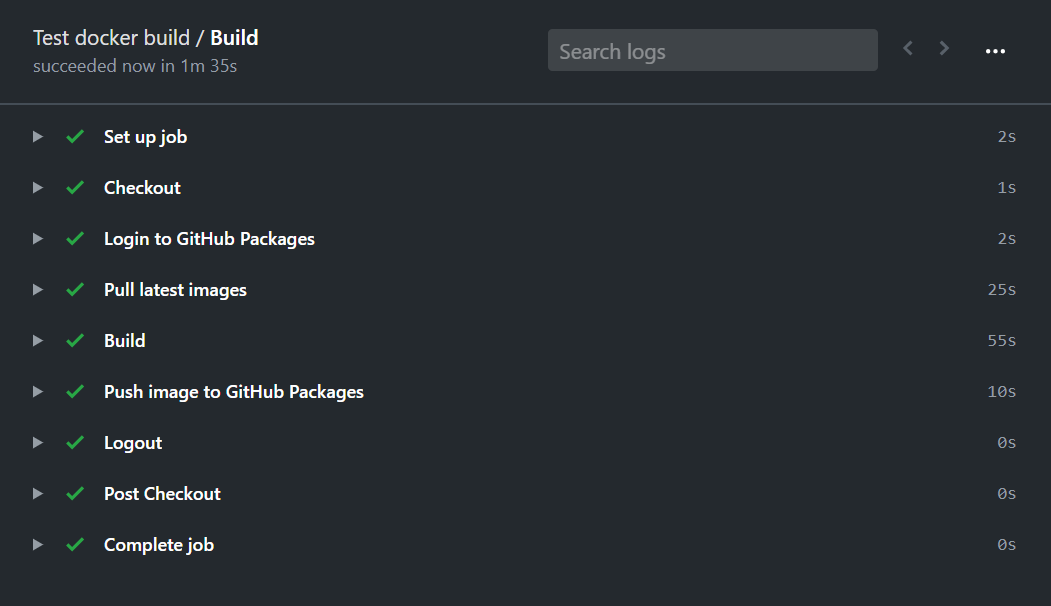

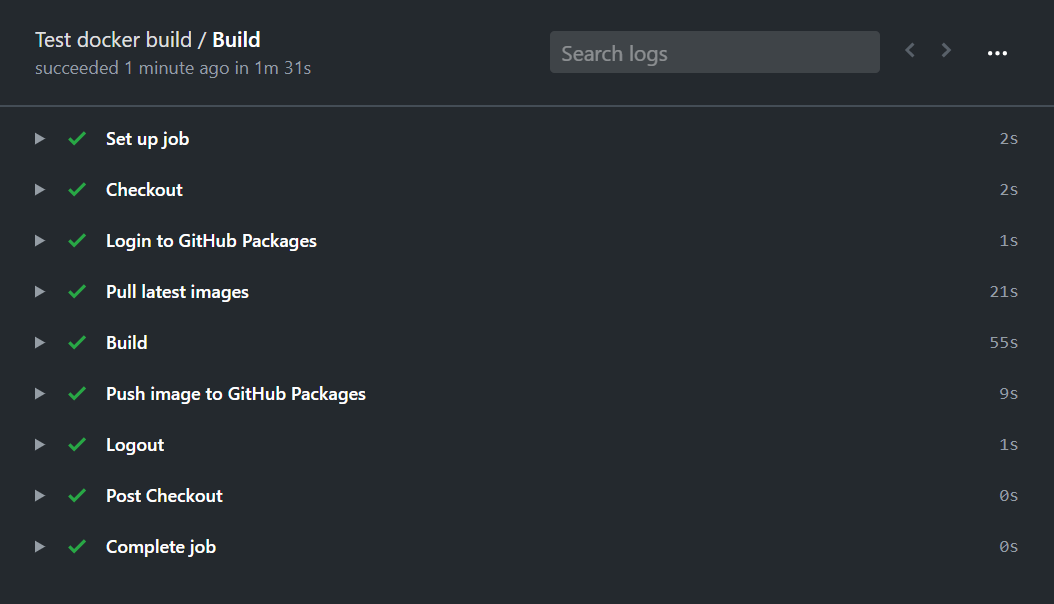

Now we'll change the build configuration so that Docker uses the cached layers from previous builds:

on:
  push:
    branches:
      - master
name: Test docker build
jobs:
  deploy:
    name: Build
    runs-on: ubuntu-latest
    env:
      IMAGE_NAME: docker.pkg.github.com/${{ github.repository }}/app
      IMAGE_TAG: ${{ github.sha }}
    steps:
      - name: Checkout
        uses: actions/checkout@v2
      - name: Login to GitHub Packages
        env:
          TOKEN: ${{ secrets.GITHUB_TOKEN }}
        run: |
          docker login docker.pkg.github.com -u $GITHUB_ACTOR -p $TOKEN
      - name: Pull latest images
        run: |
          docker pull $IMAGE_NAME:latest || true
          docker pull $IMAGE_NAME-builder-stage:latest || true
      - name: Images list
        run: |
          docker images
      - name: Build
        run: |
          docker build \
            --target builder \
            --cache-from $IMAGE_NAME-builder-stage:latest \
            -t $IMAGE_NAME-builder-stage \
            .
          docker build \
            --cache-from $IMAGE_NAME-builder-stage:latest \
            --cache-from $IMAGE_NAME:latest \
            -t $IMAGE_NAME:$IMAGE_TAG \
            -t $IMAGE_NAME:latest \
            .
      - name: Push image to GitHub Packages
        run: |
          docker push $IMAGE_NAME-builder-stage:latest
          docker push $IMAGE_NAME:latest
          docker push $IMAGE_NAME:$IMAGE_TAG
      - name: Logout
        run: |
          docker logout docker.pkg.github.com

Here we must explain why we need two build commands. The thing is that in multi-stage builds, the resulting image is a set of layers from the last stage. The layers of the previous stages are not included in the image. Therefore, when using the final image from the previous build, Docker will not be able to find ready layers for building an image with Node.js (the builder stage). In order to solve this problem, we create an intermediate image, $IMAGE_NAME-builder-stage, and send it to GitHub Packages so that it can serve as a cache source for the subsequent build.

The total build time was reduced to 1.5 minutes. Half a minute was spent on pulling the previous images.
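The same pull, build and push sequence can be kept in a script so that it is not tied to one CI system. The sketch below uses the same `docker` flags as the workflow; the function names `run` and `build_with_cache` and the `DRY_RUN` switch are assumptions added for illustration. It also assumes the classic builder used in this article: on Docker versions where BuildKit is the default, an image generally has to be built with inline cache metadata (for example `--build-arg BUILDKIT_INLINE_CACHE=1`) before it can serve as a cache source.

```shell
#!/bin/sh
# run CMD [ARG...]: execute the command, or only print it when DRY_RUN=1.
# (Hypothetical helper that makes the script inspectable without Docker.)
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# build_with_cache IMAGE TAG
# Pulls the previous images, builds the builder stage and the final image
# with both as cache sources, then pushes everything.
build_with_cache() {
  image=$1
  tag=$2
  builder="$image-builder-stage"

  # A missing image on the very first run must not fail the job.
  run docker pull "$builder:latest" || true
  run docker pull "$image:latest" || true

  # Build and tag the builder stage on its own so that it can be pushed.
  run docker build --target builder \
    --cache-from "$builder:latest" \
    -t "$builder:latest" . || return 1

  # Build the final image, reusing layers from both cache sources.
  run docker build \
    --cache-from "$builder:latest" \
    --cache-from "$image:latest" \
    -t "$image:$tag" -t "$image:latest" . || return 1

  run docker push "$builder:latest" &&
    run docker push "$image:latest" &&
    run docker push "$image:$tag"
}

# Intended use in a CI job:
#   build_with_cache "$IMAGE_NAME" "$IMAGE_TAG"
```

Setting `DRY_RUN=1` before the call prints the seven docker commands instead of executing them, which is a cheap way to review the sequence.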

Using pre-built Docker images

Another way to solve the problem of an empty Docker cache is to move some of the layers to another Dockerfile, build that image separately, push it to the Container Registry, and use it as a parent image.

Let's create our Node.js image for building an Angular application. First, we create a Dockerfile.node in our project:

FROM node:12.16.2-alpine3.11

RUN apk --no-cache --update --virtual build-dependencies add python make g++

Then we build and push our public image to Docker Hub:

docker build -t exsmund/node-for-angular -f Dockerfile.node .

docker push exsmund/node-for-angular:latest

Now we use the finished image in our main Dockerfile:

FROM exsmund/node-for-angular:latest as builder

...

In this example, the build time has not decreased, but pre-built images can be useful if you have many projects and you have to put the same dependencies in all of them.

In this article, we’ve examined several methods to speed up building Docker images. If you want to deploy faster, you can try:

- reducing context;

- using small parent images;

- using multi-stage builds;

- reordering commands in your Dockerfile in order to utilize the cache efficiently;

- configuring a cache source in CI/CD systems;

- using pre-built Docker images.

I hope these examples clarify how Docker works, and you will be able to optimize your deployment using my tips. If you want to experiment with the examples from this article, here is the link for the repository: https://github.com/devopsprodigy/test-docker-build.